What is a humanoid robot?

Definition

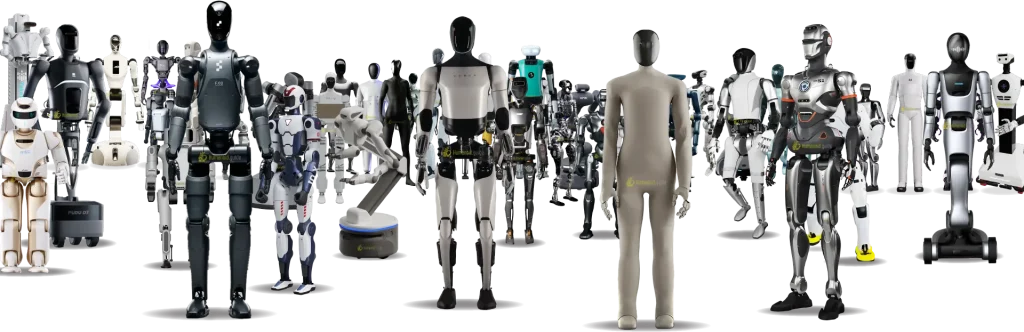

A humanoid robot is a robot designed to resemble the human body in shape and function, typically with a head, torso, two arms, and two legs. This human-like form is not just for looks: it allows the robot to operate in environments built for humans, using tools, climbing stairs, opening doors, and performing other tasks originally meant for people. In essence, a humanoid robot mimics human movements and actions so it can work alongside people or in places designed for people (unlike a factory robot confined to a cage).

A modern humanoid robot is powered by advanced AI and can perceive its surroundings, make decisions, plan actions, and carry out complex tasks autonomously. The field has made huge strides in recent years thanks to progress in artificial intelligence (especially large language and vision models), improved sensors and actuators, and cheaper computing power. Typically, a humanoid robot has dozens of joints, or “degrees of freedom” (often more than 20 DOF), giving it human-like flexibility but also making control and balance a serious challenge. Building such a robot is a multidisciplinary effort that brings together mechanical engineering, electronics, and AI, and it represents one of the most exciting frontiers in robotics today.

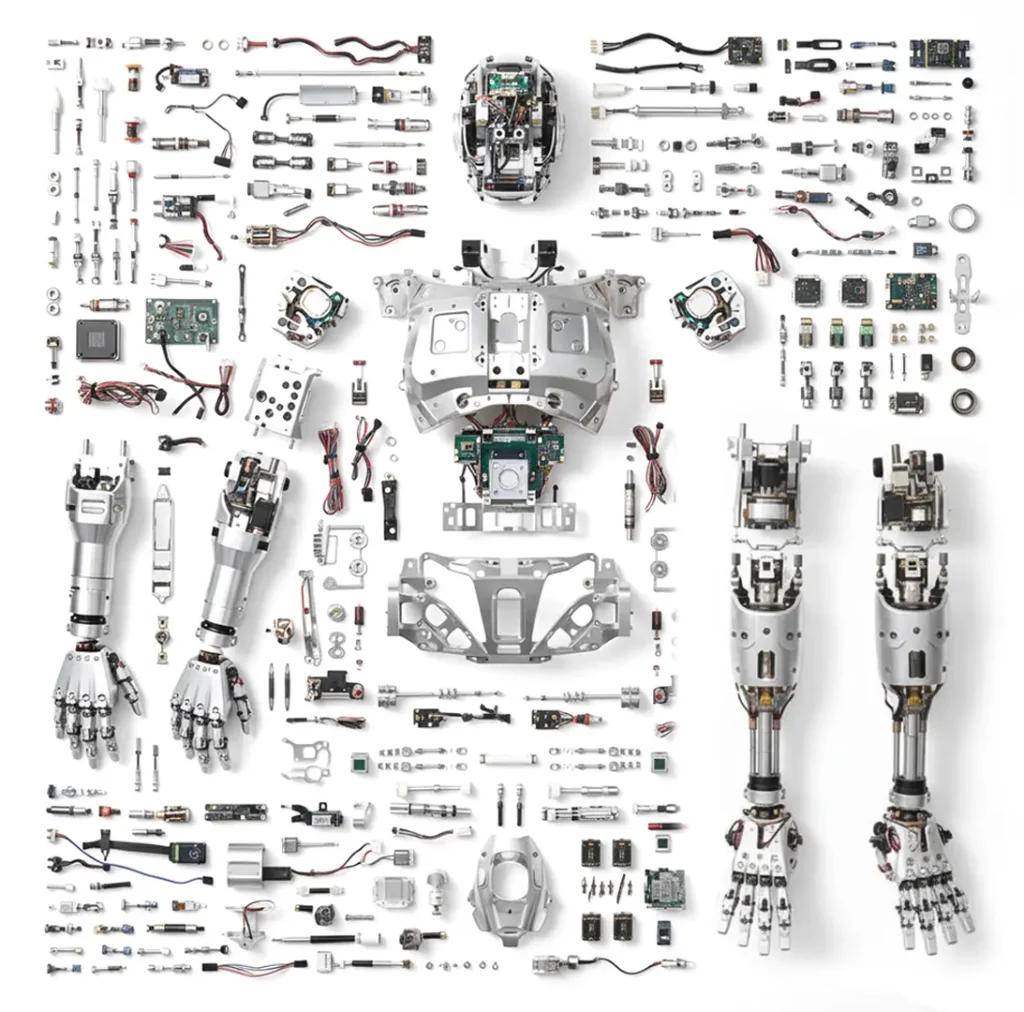

Anatomy and Key Components of a Humanoid Robot

An example of a humanoid robot’s mechanical anatomy. The humanoid form includes arms, legs, torso, and head, with numerous joints and actuators mimicking a human skeletal frame.

At a high level, humanoid robots have the same core components as any robot: a body (the structural frame), sensors, actuators (motors and other moving parts), and a control computer (the “brain”). However, humanoids integrate a complex array of advanced technologies to achieve human-like mobility and interaction. High-performance hardware is critical. For instance, a humanoid robot’s body contains powerful electric actuators (motors at the joints) often combined with sophisticated gear systems (e.g. harmonic drives or planetary reducers) to deliver human-like motion. These actuators serve as the robot’s “muscles,” controlling movement and providing the torque needed for walking, lifting, and manipulation. The robot’s skeleton or frame (often lightweight metals or composites) gives it structure, analogous to bones, and houses the motors and wiring.

Sensors

Sensors are the robot’s “sense organs,” enabling it to perceive both its internal state and the external world. Internal sensors (proprioceptive sensors) measure joint positions, motor speeds, and forces – much like how our inner ear and muscle nerves sense body posture. Humanoid robots typically use gyroscopes and accelerometers (an inertial measurement unit, IMU) to sense their orientation and balance. They also have joint encoders and torque sensors at key joints to measure movement and effort, which are crucial for maintaining balance and detecting contact forces.

External sensors (exteroceptive sensors) give the robot awareness of its environment. Most humanoids have vision systems – usually cameras in the head serving as eyes – to recognize objects and estimate depth (sometimes paired with lidar or depth cameras for 3D vision). They may have microphones to hear sound or speech, and touch sensors on the body or in the hands (tactile pads, force sensors in fingertips, etc.) to feel contact and gauge how firmly they are gripping objects. Altogether, these sensors allow a humanoid to construct a real-time picture of what is around it and how its body is positioned.
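As a rough illustration of how gyroscope and accelerometer readings can be combined into a single tilt estimate, here is a minimal sketch of a complementary filter, one common and simple fusion technique. The axis convention, the blend factor `alpha`, and the helper `estimate_static_tilt` are assumptions made for this example and do not describe any particular robot.

```python
import math


def accel_pitch(ax: float, az: float) -> float:
    """Pitch (rad) implied by the gravity direction in the accelerometer reading.

    Assumes a hypothetical axis convention (x forward, z up) and a robot that
    is not accelerating much, so the reading is dominated by gravity.
    """
    return math.atan2(ax, az)


def complementary_filter(pitch: float, gyro_rate: float, ax: float, az: float,
                         dt: float, alpha: float = 0.98) -> float:
    """One update: trust the gyro over short times, the accelerometer over long times."""
    gyro_pitch = pitch + gyro_rate * dt  # integrate the angular rate
    return alpha * gyro_pitch + (1.0 - alpha) * accel_pitch(ax, az)


def estimate_static_tilt(true_pitch: float, steps: int = 500, dt: float = 0.005) -> float:
    """Run the filter on a motionless robot tilted by true_pitch, starting from 0."""
    g = 9.81
    pitch = 0.0
    for _ in range(steps):
        pitch = complementary_filter(
            pitch, 0.0, g * math.sin(true_pitch), g * math.cos(true_pitch), dt
        )
    return pitch
```

The gyro term tracks fast motion but drifts over time, while the accelerometer term is noisy but drift-free; blending the two gives a usable estimate. Production systems often use more elaborate estimators such as Kalman filters.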

Power Supply

To power all this, humanoid robots carry an on-board energy source, typically high-density battery packs (often lithium-ion). Batteries are usually stored in the robot’s torso or in a backpack-style housing. Because walking and balancing are energy-intensive, power management and thermal management are important parts of humanoid design. Engineers must balance battery capacity with weight: a larger battery stores more energy but makes the robot heavier, harder to balance, and more power-hungry to move. Some cutting-edge humanoids also incorporate custom power systems and cooling systems to support their motors and on-board computers.
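The capacity-versus-weight trade-off can be made concrete with a back-of-the-envelope estimate. The sketch below uses the standard definition of cost of transport (power divided by weight times speed) to approximate walking power. Every number in it (base mass, specific energy, idle power, cost of transport) is an illustrative assumption, not a measurement from any real robot.

```python
G = 9.81  # gravitational acceleration, m/s^2


def locomotion_power_w(total_mass_kg: float, speed_m_s: float,
                       cost_of_transport: float) -> float:
    """Walking power from the definition CoT = P / (m * g * v)."""
    return cost_of_transport * total_mass_kg * G * speed_m_s


def endurance_hours(battery_kg: float,
                    base_mass_kg: float = 60.0,               # assumed robot mass without battery
                    specific_energy_wh_per_kg: float = 200.0,  # assumed pack-level value
                    usable_fraction: float = 0.9,              # assumed usable share of capacity
                    idle_power_w: float = 100.0,               # assumed compute and sensor load
                    speed_m_s: float = 1.0,
                    cost_of_transport: float = 0.5) -> float:
    """Estimated walking time for a given battery mass (illustrative only)."""
    energy_wh = battery_kg * specific_energy_wh_per_kg * usable_fraction
    power_w = idle_power_w + locomotion_power_w(
        base_mass_kg + battery_kg, speed_m_s, cost_of_transport
    )
    return energy_wh / power_w


if __name__ == "__main__":
    for kg in (5, 10, 20):
        print(f"{kg:>2} kg battery -> {endurance_hours(kg):.2f} h")
```

Under these assumptions, doubling the battery mass yields less than double the endurance, because the extra mass also raises the power needed to walk.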

Computing and Control Units

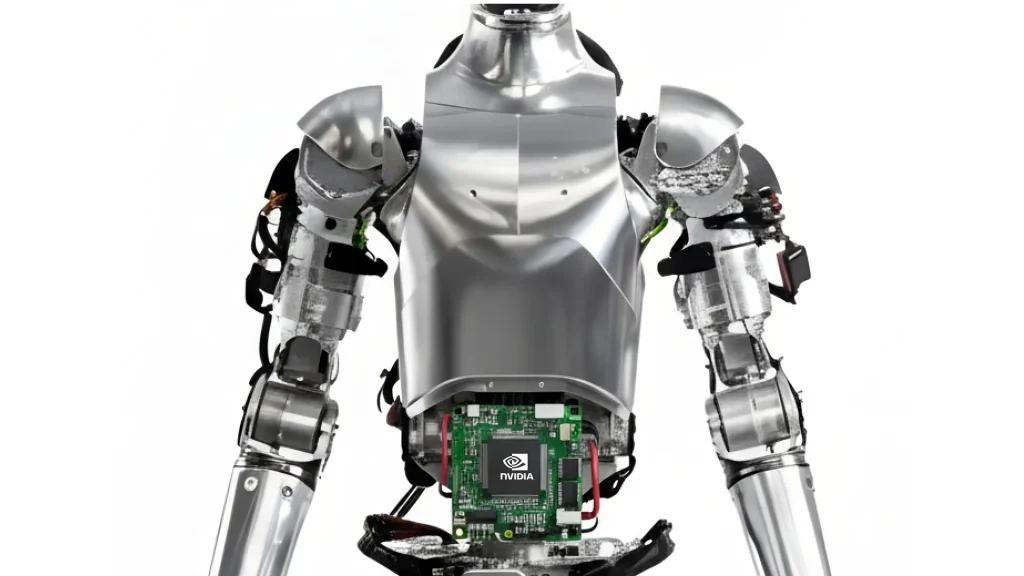

Another critical component is the on-board computing system – the robot’s electronic “brain.” Modern humanoids are equipped with one or several powerful processors (CPUs), often alongside AI accelerators or GPUs for heavy computations like vision and neural networks. These computers run the control software and AI algorithms that enable perception, planning, and autonomy.

A humanoid typically has distributed control: low-level microcontrollers may reside near the joints to handle fast motor control loops, while higher-level computers process sensor data and make decisions. Indeed, humanoid robots require numerous high-performance chips for motion control, perception, and decision-making tasks. This reliance on advanced semiconductors and AI processors means the robot’s electronics (circuit boards, communication buses, etc.) are as much a part of its anatomy as the mechanical limbs.

Summary of Anatomy

- Mechanical Structure & Actuators: Rigid links (limbs) connected by joints with electric motors or other actuators, plus gears/springs for force transmission. These provide human-like degrees of freedom in arms, legs, hands, etc., enabling complex motions.

- Sensors: Cameras (eyes) for vision, IMUs (inner ear) for balance, force/torque sensors (touch), microphones (ears) for sound, and joint sensors. These give the robot awareness of itself and its environment.

- Power Supply: Batteries and power electronics to drive motors and computers, often with thermal management to dissipate heat.

- Computing and Control Units: On-board processors (with AI chips) running the robot’s operating system and control algorithms – effectively the brain and nervous system coordinating everything.

All these parts work in unison to allow a humanoid robot to move, sense, and interact. If any component is lacking – for example, weak motors, poor balance sensing, or slow processors – the robot’s performance suffers. That’s why humanoid robots are considered one of the most challenging robotic systems to build: they demand cutting-edge tech from multiple domains (mechanical, electrical, and AI) integrated into a compact, human-shaped form.

Software Stack and Control Systems

While hardware forms the body of a humanoid, it is the software and control system that brings it to life. The software stack of a humanoid robot is often layered into a hierarchy of control, analogous to how the human nervous system has a brain for high-level thinking and reflex loops for low-level motions. In fact, engineers sometimes refer to the architecture in those terms – an AI “brain” for higher-level reasoning, and a motion control “little brain” for managing detailed movements.

High-Level AI Control (the “Brain”)

At the top of the stack is the AI system, which handles perception, understanding, and decision-making. This is the robot’s cognitive layer – it takes in processed sensor information and decides what the robot should do next. It includes the AI algorithms and models (for example, vision recognition, language processing, or task planning algorithms) running on the main processor or AI chips.

This layer performs tasks like interpreting camera images to recognize an object, understanding a voice command, or planning a path through a room. Based on this understanding, the high-level planner issues goals or commands to the lower-level controllers (for instance, “walk forward 3 steps” or “pick up the cup on the table”). Modern humanoid robots often run robotics middleware (such as ROS 2 or custom frameworks) to integrate all these functions. They maintain internal models of the world and use algorithms like motion planners and behavior trees to decide on actions. Because humanoids operate in unstructured, dynamic environments, this AI layer must be adaptive and robust, handling a lot of uncertainty. For example, the robot might use SLAM (Simultaneous Localization and Mapping) algorithms to navigate unknown spaces, or run real-time object detection to avoid obstacles.
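To make the behavior-tree idea concrete, here is a minimal sketch with the two classic composite nodes: a Sequence (all children must succeed, in order) and a Fallback (try children until one does not fail). The node classes, the blackboard dictionary, and the "fetch the cup" task are hypothetical and written only for this illustration; real systems typically use a dedicated behavior-tree library.

```python
from enum import Enum
from typing import Callable, Dict, List


class Status(Enum):
    SUCCESS = 1
    FAILURE = 2
    RUNNING = 3


class Leaf:
    """Wraps a function that reads/writes a shared blackboard and returns a Status."""

    def __init__(self, fn: Callable[[Dict], Status]) -> None:
        self.fn = fn

    def tick(self, blackboard: Dict) -> Status:
        return self.fn(blackboard)


class Sequence:
    """Ticks children in order; stops at the first child that does not succeed."""

    def __init__(self, children: List) -> None:
        self.children = children

    def tick(self, blackboard: Dict) -> Status:
        for child in self.children:
            status = child.tick(blackboard)
            if status != Status.SUCCESS:
                return status
        return Status.SUCCESS


class Fallback:
    """Ticks children in order; stops at the first child that does not fail."""

    def __init__(self, children: List) -> None:
        self.children = children

    def tick(self, blackboard: Dict) -> Status:
        for child in self.children:
            status = child.tick(blackboard)
            if status != Status.FAILURE:
                return status
        return Status.FAILURE


def build_fetch_tree() -> Fallback:
    """Hypothetical task: fetch the cup if it is visible, otherwise ask for help."""

    def cup_visible(bb: Dict) -> Status:
        return Status.SUCCESS if bb.get("cup_visible") else Status.FAILURE

    def act(name: str) -> Leaf:
        def run(bb: Dict) -> Status:
            bb["log"].append(name)  # stand-in for commanding the robot
            return Status.SUCCESS
        return Leaf(run)

    fetch = Sequence([Leaf(cup_visible), act("walk_to_cup"), act("grasp_cup")])
    return Fallback([fetch, act("ask_for_help")])
```

Ticking the tree with `{"cup_visible": True, "log": []}` runs the walk and grasp leaves; with `cup_visible` set to `False`, the Sequence fails at its first child and the Fallback moves on to asking for help.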

Low-Level Motion Control (the “Little Brain”)

Below the AI layer is the motion control system, which is responsible for executing movements smoothly and keeping the robot balanced and safe. This is akin to the subconscious reflexes and motor coordination in humans. The motion control layer includes dedicated controllers and algorithms that translate high-level commands (like a desired walking speed or arm trajectory) into precise, coordinated motor actions for each joint. Key components of this layer are balance control, gait generation, and kinematics.

For a bipedal humanoid, balance and locomotion control are extremely complex: the controller must continuously adjust motors in the legs and shift weight to prevent falls (often using a zero-moment point criterion or another dynamic balance strategy). If the robot is told to walk forward, the motion control system plans footstep placements, times the joint movements, and adjusts on the fly if it slips or if the ground is uneven. This layer often runs on a real-time computer or microcontroller to ensure fast feedback loops (on the order of milliseconds). It reads data from gyroscopes, foot pressure sensors, etc., and issues motor torque commands very rapidly to maintain stability.

In addition to walking, the motion controller handles manipulation (coordinating arm and hand joints when grasping objects) and other body movements. Modern approaches use techniques like whole-body control, which coordinates all limbs when, say, bending to pick something up, so the robot doesn’t topple. In summary, the “little brain” makes sure the robot’s motions are physically feasible and balanced at every moment.
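The zero-moment point (ZMP) criterion mentioned in this section can be sketched with the widely used cart-table (linear inverted pendulum) simplification, in which the ZMP along one axis is the center-of-mass position minus a term proportional to its acceleration. This is a one-dimensional toy under that simplifying assumption; the function names and the foot dimensions in the tests are hypothetical.

```python
G = 9.81  # gravitational acceleration, m/s^2


def zmp_x(com_x: float, com_accel_x: float, com_height: float) -> float:
    """ZMP along x for the cart-table model: p = x - (z_c / g) * x_ddot."""
    return com_x - (com_height / G) * com_accel_x


def is_balanced(zmp: float, support_min: float, support_max: float,
                margin: float = 0.0) -> bool:
    """True if the ZMP lies inside the support interval, shrunk by a safety margin."""
    return support_min + margin <= zmp <= support_max - margin


def max_forward_accel(com_x: float, com_height: float, support_min: float) -> float:
    """Largest forward CoM acceleration that keeps the ZMP from leaving the heel edge.

    From p >= support_min with p = x - (z_c / g) * a, so a <= (x - support_min) * g / z_c.
    """
    return (com_x - support_min) * G / com_height
```

A controller built on this idea keeps the computed ZMP inside the area covered by the feet; accelerating the body forward pushes the ZMP backward, which is why abrupt starts can tip a biped over its heels.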

Integration and Middleware

Between the high-level AI and low-level control, there is often a middleware or executive layer that manages communication and data flow. This ensures that perception data (camera images, lidar scans, etc.) are processed and fed into the decision algorithms, and that the resulting motion commands are sent to motor controllers. Many humanoids use a publish-subscribe architecture (like ROS) where sensor nodes, control nodes, and AI nodes exchange information in real time. For example, a camera node might publish a detected object’s location, the AI planning node subscribes to that and decides to reach for it, and then sends a grasp command to the arm control node.
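The camera-to-planner-to-arm flow described above can be sketched with a tiny in-process message bus. This is deliberately not the ROS API: `MessageBus`, the topic names, and the message fields are hypothetical stand-ins chosen to show the publish-subscribe pattern itself.

```python
from collections import defaultdict
from typing import Any, Callable, DefaultDict, Dict, List

Callback = Callable[[Dict[str, Any]], None]


class MessageBus:
    """Minimal synchronous publish-subscribe bus (illustrative, single process)."""

    def __init__(self) -> None:
        self._subscribers: DefaultDict[str, List[Callback]] = defaultdict(list)

    def subscribe(self, topic: str, callback: Callback) -> None:
        self._subscribers[topic].append(callback)

    def publish(self, topic: str, message: Dict[str, Any]) -> None:
        # Copy the list so callbacks may subscribe during delivery.
        for callback in list(self._subscribers.get(topic, [])):
            callback(message)


def start_planner(bus: MessageBus) -> None:
    """Planning node: reacts to detections by requesting a grasp."""

    def on_detection(msg: Dict[str, Any]) -> None:
        if msg["name"] == "cup":
            bus.publish("arm/grasp_command", {"target": msg["position"]})

    bus.subscribe("camera/detected_object", on_detection)


def start_arm_controller(bus: MessageBus, executed: List[Any]) -> None:
    """Arm node: records each grasp target it is asked to reach."""
    bus.subscribe("arm/grasp_command", lambda msg: executed.append(msg["target"]))
```

The nodes never call each other directly; each only knows topic names, which is what lets sensors, planners, and controllers be developed and swapped independently.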

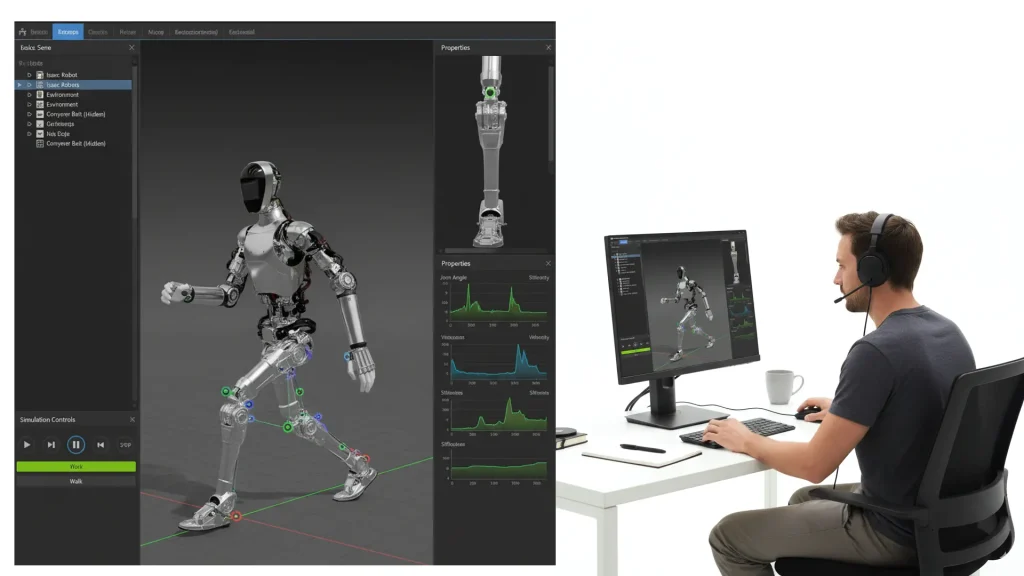

Simulation and Offline Training

Crucially, humanoid robots often rely on simulation and offline training as part of their software stack. Before attempting a complex behavior in the real world, robots can practice in physics simulators. Boston Dynamics’ Atlas, for instance, is reported to have refined dynamic moves like backflips and parkour with extensive help from simulation. In simulation, the AI and control software can experience thousands of falls and fine-tune their policies, and then transfer that learning to the physical robot. This greatly accelerates development, as damaging real hardware is costly. Simulation also allows testing of the software stack against many scenarios (different terrains, obstacles, etc.) to make the control policies more robust.
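The idea of testing against many randomized scenarios can be sketched as follows. A real pipeline would call a physics simulator; here a single textbook slip condition (an object resting on a slope slides once the tangent of the slope angle exceeds the friction coefficient) stands in for it. The parameter ranges and function names are assumptions chosen for illustration.

```python
import math
import random


def stays_put(slope_deg: float, friction: float) -> bool:
    """Toy stand-in for a simulator rollout: no sliding while tan(slope) <= friction."""
    return math.tan(math.radians(slope_deg)) <= friction


def success_rate(n_trials: int, seed: int = 0) -> float:
    """Sample random terrains and report the share of trials without slipping."""
    rng = random.Random(seed)  # seeded so the sweep is reproducible
    successes = 0
    for _ in range(n_trials):
        slope_deg = rng.uniform(0.0, 20.0)  # assumed terrain slope range
        friction = rng.uniform(0.2, 1.0)    # assumed friction coefficient range
        if stays_put(slope_deg, friction):
            successes += 1
    return successes / n_trials
```

Sweeping over randomized terrain parameters in this way exposes the conditions under which a behavior fails before any hardware is put at risk.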

Software Stack Summary

Overall, the software stack of a humanoid is a complex, layered system coordinating perception, cognition, and motion:

- Perception Modules: process raw sensor data (camera images, audio, etc.) into useful information.

- Planning and Decision Module: sets goals, chooses actions, and sequences tasks.

- Motion Planning and Control: converts a desired action into a joint trajectory and executes it with balance and accuracy.

- Safety and Reflex Layer: monitors for issues and can override with emergency behaviors.

- Communications Layer: manages networking and potential offloading, while many systems aim for full on-board operation.

Engineers often emphasize that software is the soul of the humanoid: while the hardware provides the necessary capabilities (strength, speed, sensors), it is the sophisticated software that coordinates everything and imbues the robot with autonomous behavior. Industry experts note that mastering advanced motion control algorithms and AI “brain” software is key to leading in humanoid robotics – the robot’s intelligence and fluidity of movement ultimately come from its software stack.

AI and Machine Learning in Humanoid Robots

Early humanoid robots were pre-programmed to execute specific movements, but modern humanoids leverage AI and machine learning to act intelligently and adapt to new situations. AI is what enables a humanoid robot to go from just a moving mannequin to a true autonomous agent that can perceive its environment and decide on actions. Several categories of AI technologies are integrated into today’s humanoid robots:

Computer Vision and Perception

Humanoids use advanced AI models to interpret camera images and other sensor data. Convolutional neural networks and vision transformers allow the robot to recognize objects, people, and scenes in real time. Depth sensors combined with AI algorithms help in obstacle avoidance and mapping. Vision-Language Models are emerging as well – these AI models can both see and understand language, enabling the robot to follow verbal instructions about objects in the environment. An example is 1X Technologies’ “Redwood” AI model, a vision-language transformer tailored for a humanoid robot that can perform mobile manipulation tasks like retrieving objects, opening doors, and navigating a home. Redwood runs on the robot’s onboard GPU and allows the robot to learn from real-world experience, showing how cutting-edge AI helps humanoids handle varied, unstructured environments.

Natural Language Processing

To interact naturally with humans, some humanoid robots include AI for speech recognition and language understanding. This lets a person give spoken commands or ask questions. Large Language Models (LLMs) can be used so the robot can understand context and respond conversationally. For instance, a humanoid home assistant might use an offboard speech-to-text and text-to-text model to parse a user’s request, then convert it to an action goal for the robot’s own planner. While still a developing area, integrating language AI means humanoid robots can follow complex instructions (“Can you fetch my glasses from the bedroom upstairs?”) and even explain their actions or answer questions.

Motion Planning and Control AI

Walking on two legs and manipulating objects with dexterous hands are extremely complex control problems. Increasingly, machine learning is used to improve locomotion and manipulation. Reinforcement learning (RL) allows a robot to learn how to optimize its gait or balance by trial and error, either in simulation or with cautious real-world practice. Some humanoids have neural network policies that take sensor inputs and output joint torques, having been trained to minimize falls.

Additionally, imitation learning is used: robots acquire new skills by observing or mimicking human demonstrations. In humanoid robotics, a common approach is to teleoperate the robot to perform a task (guided by a human pilot) and record the data, then use that data to train a policy so the robot can do the task autonomously – essentially learning from demonstration. This has led to the concept of Large Behavior Models in robotics, analogous to large language models, which absorb large amounts of teleoperated or simulated experience and learn general skills. Sanctuary AI, for instance, emphasizes teleoperating their humanoid to perform diverse activities to gather “an enormous amount of data” – including vision and touch – which is then used to train their AI control system (codenamed Carbon) to have human-like dexterity and decision-making. Through such learning, the AI can grasp the nuances of handling fragile vs. heavy objects, or how to recover if the robot starts to lose balance.
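Learning from teleoperated demonstrations can be illustrated in its simplest form, often called behavior cloning: fit a policy that maps recorded observations to the actions the human operator chose. The sketch below fits a one-dimensional linear policy by ordinary least squares. Real systems train neural networks on far richer data, and the grip-force scenario and numbers here are invented for the example.

```python
from statistics import fmean
from typing import List, Tuple


def fit_linear_policy(demos: List[Tuple[float, float]]) -> Tuple[float, float]:
    """Least-squares fit of action = w * observation + b to (observation, action) pairs."""
    observations = [obs for obs, _ in demos]
    actions = [act for _, act in demos]
    mean_obs, mean_act = fmean(observations), fmean(actions)
    variance = sum((obs - mean_obs) ** 2 for obs in observations)
    if variance == 0:
        raise ValueError("demonstrations need at least two distinct observations")
    w = sum((obs - mean_obs) * (act - mean_act) for obs, act in demos) / variance
    b = mean_act - w * mean_obs
    return w, b


def policy(observation: float, w: float, b: float) -> float:
    """Apply the cloned policy to a new observation."""
    return w * observation + b


# Hypothetical demonstrations: (object mass in kg, grip force in N chosen by the operator).
DEMOS = [(0.1, 1.2), (0.5, 2.0), (1.0, 3.0), (2.0, 5.0)]
```

Calling `fit_linear_policy(DEMOS)` recovers the operator's implicit rule, and `policy` then produces a grip force for object masses that never appeared in the recordings, which is the essence of generalizing from demonstration.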

Decision-Making and Autonomy

Beyond low-level movement, AI helps with higher-level decisions. Humanoid robots in complex settings need a form of executive functioning – deciding which task to do first, how to handle unexpected events, and when to ask for help. This may involve planning algorithms that break down a goal into steps and autonomous reasoning about the world state. Some research connects symbolic AI (for reasoning about goals) with the robot’s learned skills. We are also seeing experimentation with combining LLMs with robotic control, where the language model can serve as a high-level planner by reasoning in words about a task and then triggering the robot’s skills (this approach has been tested in research, guiding robots with pseudo-internal monologues or plans generated by an LLM).
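The pattern of a language model acting as a high-level planner that triggers existing robot skills can be sketched without any real model. In the example below, `stub_planner` stands in for the LLM and returns a canned plan; the skill names, the `skill(argument)` step format, and the registry are all hypothetical. The point illustrated is the validation step: every planned step is checked against the skills the robot actually has before anything runs.

```python
import re
from typing import Callable, Dict, List, Tuple

# Hypothetical skill registry: each skill takes a target name and reports what it did.
SKILLS: Dict[str, Callable[[str], str]] = {
    "walk_to": lambda target: f"walking to {target}",
    "pick_up": lambda target: f"picking up {target}",
    "hand_over": lambda target: f"handing over {target}",
}

STEP_PATTERN = re.compile(r"^(\w+)\((\w+)\)$")


def stub_planner(instruction: str) -> List[str]:
    """Stand-in for a language model; returns a fixed plan regardless of input."""
    return ["walk_to(bedroom)", "pick_up(glasses)", "walk_to(user)", "hand_over(glasses)"]


def parse_plan(steps: List[str]) -> List[Tuple[str, str]]:
    """Validate every step before execution; reject unknown or malformed steps."""
    parsed = []
    for step in steps:
        match = STEP_PATTERN.match(step.strip())
        if match is None or match.group(1) not in SKILLS:
            raise ValueError(f"unsupported plan step: {step!r}")
        parsed.append((match.group(1), match.group(2)))
    return parsed


def execute_plan(steps: List[str]) -> List[str]:
    """Run the plan only if all steps passed validation."""
    return [SKILLS[name](arg) for name, arg in parse_plan(steps)]
```

Validating the whole plan first means a hallucinated or malformed step stops execution before the robot has moved, rather than halfway through a task.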

Adaptation and Learning On the Fly

True human-level versatility requires robots to continue learning during deployment. Some humanoid robots are now capable of online learning – adjusting their behavior based on new data in real time. They might refine their vision recognition if lighting conditions change, or personalize their interaction style to different users. AI techniques like continual learning and federated learning (aggregating learnings from multiple robots) are being explored to keep humanoids improving without needing frequent re-programming.

Humanoid Robot Safety

It’s important to note that with greater AI integration comes a need for safety and reliability. Developers incorporate fail-safes so that if the AI produces an uncertain or dangerous action, the robot can default to a safe behavior (for example, freezing movement or handing control back to a human operator). Also, simulation and rigorous testing are used to validate AI models before deploying them in the real world. Despite challenges, the infusion of machine learning has made today’s humanoid robots far more capable than their predecessors. They can handle variability – walking on uneven ground, manipulating objects they haven’t seen before – by drawing on what they’ve learned. In fact, humanoid robots are learning and adapting faster than ever, using AI models to perceive, plan, and act autonomously in real time. As AI algorithms continue to advance (and as more data is collected from robots operating in the field), we can expect humanoids to become even more skilled and autonomous.
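The fail-safe behavior described in this section, freezing when the AI's output is uncertain or dangerous, can be sketched as a small gate between the AI policy and the motors. The confidence score, the thresholds, and the `ProposedAction` type are assumptions for illustration; how a real system quantifies uncertainty varies widely.

```python
import math
from dataclasses import dataclass
from typing import List, Tuple


@dataclass
class ProposedAction:
    joint_velocities: List[float]  # rad/s, one entry per joint
    confidence: float              # assumed self-reported score in [0, 1]


def safety_gate(action: ProposedAction,
                max_velocity: float = 2.0,     # assumed per-joint speed limit
                min_confidence: float = 0.6    # assumed acceptance threshold
                ) -> Tuple[str, List[float]]:
    """Pass the action through, or replace it with a freeze command."""
    freeze = [0.0] * len(action.joint_velocities)

    # Uncertain output (including NaN confidence): default to the safe behavior.
    if not math.isfinite(action.confidence) or action.confidence < min_confidence:
        return "freeze_uncertain", freeze

    # Dangerous output: any non-finite or over-limit joint velocity.
    for velocity in action.joint_velocities:
        if not math.isfinite(velocity) or abs(velocity) > max_velocity:
            return "freeze_unsafe", freeze

    return "execute", list(action.joint_velocities)
```

Keeping this check outside the learned model means it still applies when the model itself misbehaves; the returned label could also be used to trigger a handover to a human operator.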

Teleoperation and Remote Presence

Despite the progress in autonomy, a significant aspect of humanoid robotics today is teleoperation – that is, a human remotely controlling the robot. Teleoperation provides a practical bridge between current AI capabilities and the complex tasks we want humanoid robots to do. In teleoperated mode, a human operator can step into the robot’s shoes (or rather, circuits) and guide it to perform tasks via a live control link, effectively using the robot as an avatar in a remote or hazardous location.

Humanoid teleoperation can be as simple as using a joystick and screen to drive the robot, or as immersive as wearing a full VR (virtual reality) setup with motion-tracking controllers to literally act through the robot. In recent years, the convergence of humanoid robots with VR interfaces has brought telepresence fantasies closer to reality – some suggest that teleoperating humanoid robots could be the “killer app” that finally gives VR a broad purpose. Using a VR headset and gloves, an operator can see through the robot’s cameras in 3D and move their arms to make the robot’s arms move, achieving high-fidelity control. This allows extremely delicate or complex tasks to be done by the robot with human intelligence fully in the loop.

Why Teleoperate a Humanoid Robot?

- Human Skill on Demand: Instantly apply human problem-solving and dexterity to the robot’s situation. In the near future there may be a period where humanoid robots are deployed widely but still need human teleoperators to handle the trickiest situations or recover from mistakes.

- Training the Robot’s AI: Teleoperation is a powerful way to teach robots new skills. Human-performed demonstrations become training data for learning algorithms (e.g., Large Behavior Models).

- Immediate Utility in Hazardous/Remote Environments: Use robots right now in dangerous or inaccessible scenarios.

- Remote Presence and Service: Provide telepresence for meetings, education, or customer service; one operator could “be” the face of multiple robots.

To enable effective teleoperation, developers focus on intuitive control interfaces and feedback systems (motion capture, exoskeletons, haptics). Low-latency communication is crucial; advances in networking and edge computing help reduce latency. Some systems explore partial autonomy in teleoperation – the human provides high-level guidance while onboard AI ensures stability and safety.
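The combination of low-latency requirements and partial autonomy can be illustrated with a small onboard filter for operator commands: stale commands are discarded in favor of holding still, and fresh commands are scaled down to a speed limit. The 0.2 s age budget, the 0.5 m/s limit, and the assumption that operator and robot clocks are synchronized are all illustrative choices.

```python
import math
from dataclasses import dataclass
from typing import Optional, Tuple

Vec3 = Tuple[float, float, float]
HOLD: Vec3 = (0.0, 0.0, 0.0)  # zero velocity: keep the hand where it is


@dataclass
class TeleopCommand:
    sent_at: float        # operator-side timestamp in seconds (clocks assumed synced)
    hand_velocity: Vec3   # requested hand velocity in m/s


def select_hand_velocity(cmd: Optional[TeleopCommand], now: float,
                         max_age_s: float = 0.2,
                         speed_limit: float = 0.5) -> Vec3:
    """Return the velocity the robot should actually execute this control cycle."""
    # No command, or one delayed beyond the latency budget: hold position.
    if cmd is None or now - cmd.sent_at > max_age_s:
        return HOLD

    speed = math.sqrt(sum(v * v for v in cmd.hand_velocity))
    if speed <= speed_limit:
        return cmd.hand_velocity

    # Keep the operator's direction but cap the magnitude.
    scale = speed_limit / speed
    vx, vy, vz = cmd.hand_velocity
    return (vx * scale, vy * scale, vz * scale)
```

The human still decides where the hand goes, while the onboard check decides whether and how fast it is safe to follow, which is one simple form of shared control.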

Teleoperation is not a permanent crutch but part of a continuum toward full autonomy. Many real robot demos that appear autonomous are actually teleoperated behind the scenes, often to collect data to improve the AI. Over time, the need for teleoperation should diminish as robots get smarter, but it will remain a valuable fallback in critical applications.

Applications and Use Cases of Humanoid Robots

Humanoid robots have potential uses anywhere we might want a machine to perform human-like tasks or work in human-oriented environments. Because they are designed with human form and capabilities in mind, they are especially suited to jobs that involve the same tools, spaces, or social interactions as human workers. Key application areas include:

Manufacturing and Logistics

Work alongside people on assembly lines, handle parts and tools designed for humans, perform repetitive or strenuous tasks (lifting, positioning, inspections), navigate factory floors, pick/pack in warehouses, unload trucks, and use existing infrastructure (ramps, elevators, doors). Goals include productivity boosts and addressing labor shortages. One example of a robot suitable for this area is the Agility Digit.

Healthcare and Caregiving

Assist staff by transporting supplies or medications, disinfecting rooms, basic patient monitoring, potentially lifting/moving patients and assisting in therapy. The aim is to complement workers by taking over mundane or physically demanding duties. One example of a robot suitable for this application is the Neo Gamma by 1X.

Customer Service and Hospitality

Greeters, guides, and concierges in public spaces. Natural interaction via speech and gestures; future roles may include taking orders or giving tours, as language and perception improve. One example of a robot suitable for this application is the Neo Gamma by 1X.

Education and Research

Educational tools for programming/robotics, platforms for embodied AI research, and special-needs support. Competitions (e.g., humanoid soccer) drive progress in perception, balance, and coordination. One example of a robot suitable for this application is the R1 by Unitree, due to its low cost.

Public Safety and Maintenance

Patrolling, inspection, inventory auditing, and janitorial tasks. Versatility allows use of existing tools and navigating human-built spaces. One example of a robot suitable for this area is the Walker S2 by Ubtech Robotics.

Hazardous and Extreme Environments

Disaster response, hazardous industrial sites, deep-sea exploration, and space. Humanoids can operate equipment designed for humans, acting as surrogates in dangerous settings, potentially teleoperated.

Personal and Home Robotics

General-purpose helpers for household chores, assistance for elderly/disabled, integrating voice assistants and personalized AI. Homes are challenging environments, but the payoff is significant. One example of a robot suitable for this application is the Neo Gamma by 1X.

It’s clear that the potential applications are broad. Virtually any environment built for humans could see humanoid robots playing a role. Analysts predict that by 2030-2035, humanoid robots could become a common presence in daily life, working well beyond the confines of factories. Each use case comes with its own requirements, but the core technology is transferable.

Challenges and Considerations

In all applications, challenges include:

- Reliability and Safety: Operating among people requires high safety standards; falls or glitches can be harmful.

- Battery Life: Endurance is limited; many current systems run only an hour or two under active load.

- Cost: High upfront costs require strong business cases; mass production and tech advances may reduce costs over time.

- Human-Robot Interaction: Managing variability and human unpredictability; continued advances in AI and HRI are essential.

Humanoids can take over the “3 D’s” (dirty, dull, dangerous), improving safety and augmenting human labor rather than replacing it. New jobs will emerge (maintenance techs, operators, trainers). Policymakers and companies are working on ensuring complementary collaboration between humans and robots.

Conclusion

The humanoid robot is no longer just a sci-fi concept but an evolving reality. By structuring a robot with a human-like form and equipping it with advanced components, software, and AI, we obtain a machine that can step into many roles traditionally filled by people. As the technology matures, we will see these robots progressively move from laboratories and pilot programs into the real world – in factories, hospitals, homes, and beyond – tackling tasks and solving problems side by side with human coworkers. The journey to ubiquitous humanoid robots is just beginning, but it holds the promise of transforming industries and daily life in profound ways.
